How I discovered the power of trigrams for text search in SQL Server

I was recently tasked with the migration of a report which accidentally led to an exciting journey where I discovered the power of trigrams. So, grab a cup of tea, come along, and lets dive right into it.

The assignment:

I should first explain that this migration report piece was part of a bigger project. The end goal was to remove any direct dependency between our monolithic databases and internal/external reporting. This means using our enterprise data warehouse as source of truth instead.

Like most companies out there, our back end is constantly evolving, driven by emerging frameworks and design patterns. As we are moving towards a serverless architecture, it is imperative we decouple OLTP from reporting, to make the transition easier.

Hopefully, this has given you some context and we can now continue with the report itself.

Questions before the migration:

-

What is the purpose of the report?

The report allows users to search for specific terms (including inflections) in multiple text-based columns, linked to a series of products we offer.

-

How is the report used?

Before migrating an existing feature or product, it is worth checking how users interact with it and what issues they face whilst using it. In this case, it seemed most users did not understand the full text search filters available in the report and complained about errors being thrown, inaccurate data and an overall slow response.

-

How is the data surfaced in the report?

There is a daily job which pulls the text-based data from different OLTP databases and pushes that data into a utility/reporting database with full-text search enabled. The report itself gets the data from that utility/reporting database and OLTP databases to return the results.

Asking those questions is important when working on a migration piece. You want to make sure you keep the same functionality whilst fixing any potential issues. It is a good opportunity to revisit the solution, as long as it is scoped properly, and deadlines are met.

After answering those questions, I realised that the current report had the following issues:

- Only new text-based data is added to the utility database. Updates are not taken into consideration.

- The report is not showing accurate operational data due to backend changes since implementation.

- The filters available in the report are not easy to understand, and full-text capabilities are not being used.

- The response time is not optimal, due to data being pulled from OLTP databases and full-text search performance caveats.

- The stored procedure behind the report is pulling data from different databases, creating unnecessary dependencies.

By this point I had all the information I needed to start working. I just needed to weight out my options.

Text Search options:

Before going through all the options available in SQL Server, I just want to quickly say that I discarded other options like ElasticSearch right away due to time restrictions.

When it comes to searching string data in SQL Server, there are typically 2 different approaches, depending on the requirements:

-

Use wildcard search queries:

where column like ‘%search criteria%’.Pros: The LIKE operator is quite flexible, handling multiple types of search (begins with, contains, ends with), and allowing multiple wildcards.

Cons: Can be slow and resource intensive, specially when the LIKE operator starts with a wildcard. i.e. LIKE ‘%word’, turning our predicate in non-sargable,

-

Leverage Full-Text Search linguistic matching capabilities.

Pros: Full-Text search is very powerful and offers features such as stemming, weighted results etc.

Cons: Full-text search does not integrate well in query plans. Performance can be severely impacted when combined with other filters in a query.

From the get-go, it seemed that these 2 options were not optimal. So I kept looking, and I found this exceptionally well written article by Paul White, about trigram wildcard string search. Trigrams was a perfect fit as it allowed for simple wildcard search terms, whilst helping boost performance.

Trigrams:

A trigram in the context of this article, is essentially a set of 3 continuous characters. I.e. in the word “Powerful” we can find the following trigrams:

-Pow, owe, wer, erf, rfu, ful

If you still don’t know how using trigrams can speed up your text searches, please bear with me.

The solution involves:

- Generating and persisting trigrams for all text-based search columns (target data).

- Generating trigrams for the search term used in the report and keep the 3 more selective ones.

- Match trigrams from the search term and the persisted data set

- Apply search term again against reduced dataset.

Let’s illustrate this with an example:

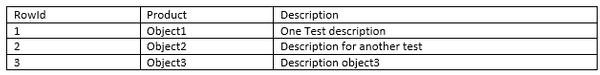

In our OLTP database we have a table with the following data:

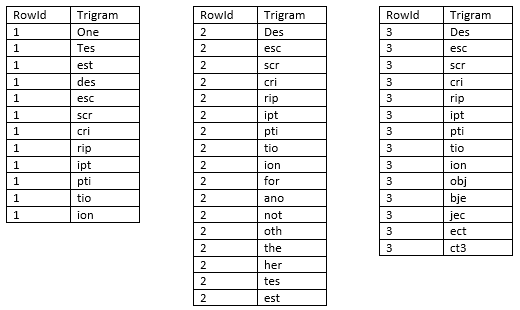

Step 1: We generate trigrams for the column “Description”, and remove duplicates before persisting the results. Note that trigrams with special characters or blanks are discarded.

Step 2: We generate trigrams for the search term. Let’s assume the user is interested in Products with description contain the text “Test description”.

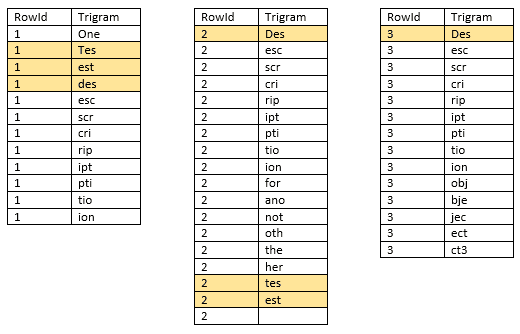

If the search term yields more than 3 trigrams, we use the three most selective ones. If we end up with three or less trigrams, we use all the ones available. In this case, we have got more than three trigrams so lets find out which ones are more selective:

- Tes (2 occurrencs)

- est (2 occurrences)

- des (3 occurrences)*

*All remaining trigrams have 3 occurrences so it will use the first one available.

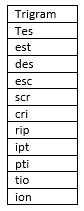

Step 3: We find records where we have a match with all three trigrams.

In this case, both products with rowId 1 and 2 are a match.

Step 4: Once we have all the records with matched trigrams, we are not done yet. Trigrams alone will potentially return more results than necessary as we are not taking into consideration the order of appearance in the target text.

Going back to our example, although we get 2 results, one of them is a false positive and that is OK. After all, trigram search is only supposed to reduce the size of your target dataset as much as possible. The search term “Test description” still needs to be applied to all rows matched using trigrams.

Our final query will still have a non-sargable predicate but it will be used against a much smaller dataset.

The implementation:

Once I knew what text search tool I wanted to use, it was time to put everything together.

As the article mentions, trigrams are great for simple search criteria and they can be very fast, especially when using highly selective filters. However, they require more initial groundwork.

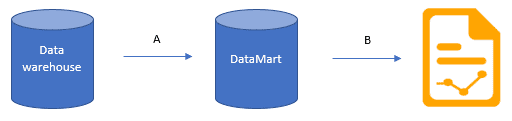

Based on the issues discussed earlier in this article, I decided to implement a new DataMart, which would include all the data the report needs. This solves dependency issues and helps with performance.

- New ETL to load all text-based columns and additional operational data needed in the report. Trigrams are generated for each text-based column and stored separately.

- Report runs a stored procedure which pulls data from the new Datamart only. Trigrams are used to reduce the number of results before applying the search term again with LIKE operator.